November 9, 2015

Last Thursday, we held a LabStorm to discuss how Feedback Labs used the Net Promoter System (NPS) to get feedback on its recent Feedback Summit.

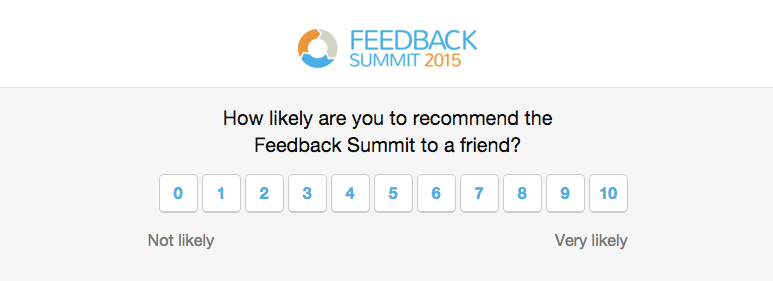

After the Summit, we sent out the following question to attendees:

> [On a scale of 0 to 10] How likely are you to recommend the Feedback Summit to a friend?

and then:

> Tell us a bit more about why you chose [X score].

Using the Delighted platform, we were able to email all attendees quickly and analyze their responses. Our Net Promoter Score was 46.
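
For readers new to NPS, the standard arithmetic works like this: respondents who answer 9 or 10 are promoters, those who answer 7 or 8 are passives, and those who answer 0 through 6 are detractors. The score is the percentage of promoters minus the percentage of detractors, so it ranges from -100 to 100. The Python sketch below illustrates that calculation. The function names and sample responses are hypothetical (they are not the Summit's actual data), and it is not meant to describe how Delighted computes or rounds the figure internally.

```python
from collections import Counter


def classify(score: int) -> str:
    """Map a 0-10 answer to its standard NPS segment."""
    if not 0 <= score <= 10:
        raise ValueError(f"score must be between 0 and 10, got {score}")
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"


def net_promoter_score(scores: list[int]) -> float:
    """Percentage of promoters minus percentage of detractors (-100 to 100).

    Passives count toward the total number of respondents but
    neither raise nor lower the score.
    """
    if not scores:
        raise ValueError("need at least one response")
    counts = Counter(classify(s) for s in scores)
    return 100 * (counts["promoter"] - counts["detractor"]) / len(scores)


# Hypothetical responses for illustration only, not the Summit data.
responses = [10, 9, 9, 8, 7, 10, 6, 9, 5, 10]
print(net_promoter_score(responses))  # → 40.0
```

In this made-up sample, 6 of 10 respondents are promoters and 2 are detractors, giving (6 - 2) / 10 × 100 = 40. Because passives only enlarge the denominator, moving someone from passive to promoter raises the score, while moving someone from detractor to passive raises it by the same amount.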

Even though we had insights from members who had already used NPS, there was something unique about trying it for ourselves.

Here are some points from the LabStorm discussion, in which we tried to make sense of our experience with NPS and what the results mean:

1. Is the system transferable to the nonprofit sector?

Does a high NPS map to high impact? Does it matter whether it does? If it doesn’t, can the score still be useful for other things? It’s critical to distinguish between the more general “system” and the more specific “score.” Beyond the absolute score, the system (segmenting our audience into promoters, passives, and detractors) did help us identify useful areas for improvement. The comments explaining why a score was given provided the most valuable information.

But the score itself didn’t tell us much about impact. We looked for other conferences’ NPS results to benchmark against, without much luck. This could be because annual conferences aren’t a good fit for NPS: the long gap between this Summit and the next leaves little opportunity to iterate and improve rapidly.

2. Is the question transferable to the nonprofit sector? If not, how should we tweak it?

Delighted is extremely easy to use for both surveyors and respondents. We were particularly impressed that respondents can answer the NPS question directly in the email.

One limitation, however, is that we couldn’t change the wording of the NPS question at all. The classic NPS question asks how likely you are to recommend X to a friend, but we might have preferred to ask about a “colleague” instead.

3. What is the best way to disseminate the score?

What should Feedback Labs do with the score? We want to be open and transparent about it, both to encourage others to try NPS and to contribute to a benchmarking pool. But does making the score public matter? After all, NPS is supposed to be less about the absolute score than about internal improvement over time.

If benchmarking were, in fact, useful, I’d ask: how do I know what to compare myself to? I can see how rental car companies benchmark against each other, but what would we benchmark our Summit against? Are other conferences similar enough for benchmarking to make sense?

Maybe rather than benchmarking scores against one another, it would be useful to benchmark rates of improvement across organizations.