Author: Renee Ho

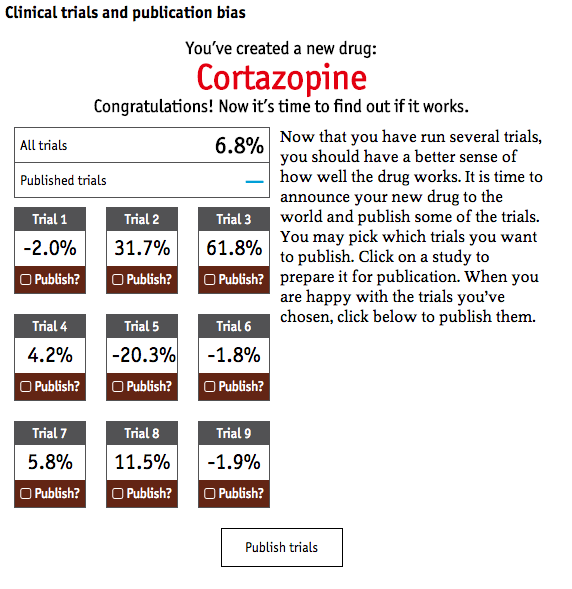

You’ve created a new drug: Doraxepam. You run a few trials to see if it works. Trial 1: -18.6%. Ooh. Not that good. Better not to make that public, and to run a few more trials.

You run a few more trials and get effectiveness results that range from -22.8% to 54.5%. You publish only selected results, giving your drug an average rating of 38.9%, compared with just 10.5% had you published all of them. This way, you can sell more of your drug even if it may not help patients.

According to Will MacAskill, author of Doing Good Better, “Effective altruism is about trying to do the very most good that you can, making not just a difference but the most difference you can, and then taking an evidence-based, scientific approach.”

We completely agree. Americans gave $358 billion to charity in 2014. We need to at least try to know if this money has any impact.

But what exactly is an “evidence-based, scientific approach”? What exactly is good evidence?

Based on the close collaboration of his organization, 80,000 Hours, with Giving What We Can and GiveWell, we might conclude that “evidence” often means the average results that come from randomized controlled trials (RCTs). Adapted from the clinical trials used in medical science, RCTs are now pervasive in the movement for evidence-based aid and philanthropy.

What happens when good evidence turns out to be bad?

It’s not totally clear how RCTs took over as the “gold standard” of evidence. What is clear, though, is that they’ve been put on a pedestal that has some shaky legs. RCT findings don’t necessarily make for great evidence.

There are lots of reasons why this is the case (see upcoming Closing the Loop article) but let’s focus on one here: publication bias.

According to a July article in the Economist, “Failure to publish the results of all clinical trials is skewing medical science…. Some estimates suggest the results of half of all clinical trials are never published. These missing data have, over several decades, systematically distorted perceptions of the efficacy of drugs, device and even surgical procedures.”

John Ioannidis, a meta-science researcher, has argued that most published research findings are false and has shown that many highly cited studies are subsequently contradicted by other studies.

The Economist also created a fun clinical trial simulator that lets you game the system by publicizing only the results that favor your own product. It’s a problem when you, as an academic or pharmaceutical company, shine a light only on the things that you want to see.
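To see how much selective publication can move an average, here is a minimal sketch in Python. It is not the Economist’s simulator. The three values -18.6, -22.8 and 54.5 come from the Doraxepam story above, while the other trial results, the 10% publication threshold, and the function names are assumptions made up for illustration.

```python
from statistics import mean

# Hypothetical trial results, in percent improvement. Only -18.6, -22.8 and
# 54.5 appear in the article; the remaining values are invented placeholders.
all_trials = [-18.6, -22.8, 5.0, 31.2, 54.5, 12.1]


def published_subset(results, threshold):
    """Return the trials a sponsor would publish: those at or above threshold."""
    return [r for r in results if r >= threshold]


def publication_bias(results, threshold):
    """Gap between the published average and the average of all trials.

    Raises statistics.StatisticsError if no trial clears the threshold.
    """
    return mean(published_subset(results, threshold)) - mean(results)


# Assumed rule: only trials showing at least a 10% improvement get published.
published = published_subset(all_trials, threshold=10.0)

print(f"Average of all trials:       {mean(all_trials):.1f}%")
print(f"Average of published trials: {mean(published):.1f}%")
print(f"Inflation from selection:    {publication_bias(all_trials, 10.0):.1f} points")
```

Nothing in the individual trials is falsified here. The distortion comes entirely from which results are left out, which is why it is so hard to spot from the published record alone.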

The point is that “evidence” is a tricky, politicized thing. How do we know what we know? While most of us mean well by supporting an evidence-based, scientific approach, it can often backfire if we are too confident about the “evidence.” Researchers’ and pharmaceutical companies’ interests aren’t always aligned with the end-users’ interests.

Aid and philanthropy, like medicine, need to look to additional forms of evidence, and to other forms of knowledge and power, while still maintaining a strong “evidence-based, scientific approach.” Beneficiary feedback can be a strong form of evidence and can be part of a scientific method.

It’s time we focus less on what the “experts” say and a little more on what the “beneficiaries” say. Maybe the beneficiaries can even be… the experts.